The development of AI has been ongoing for decades, but the underlying technologies and infrastructure have accelerated significantly. Over the past decade we’ve started to see the massive improvements and optimizations that can be achieved. We’ve gone through stages of what ML models could do, from sparks, to interesting, to practical, to mind-blowing. We’ve crossed the equivalent of an uncanny valley and things have started to get scary. It’s not about the progress, it’s about the pace. It’s always been about the pace. Moore’s Law doubles capacity every 2 years. AI capacity doubles every 3 months.
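To put those two doubling rates side by side, here’s a back-of-the-envelope sketch. It takes the 2-year and 3-month figures at face value (they’re the numbers quoted in this post, not measurements), and `growth_factor` is just a name I made up for the illustration.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """How many times capacity multiplies over `years` if it doubles
    once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Assumed doubling periods, taken straight from the paragraph above.
MOORE_DOUBLING_YEARS = 2.0   # doubling every 2 years
AI_DOUBLING_YEARS = 0.25     # doubling every 3 months

window = 2  # compare both over the same 2-year window
moore = growth_factor(window, MOORE_DOUBLING_YEARS)
ai = growth_factor(window, AI_DOUBLING_YEARS)

print(f"Moore's Law over {window} years: {moore:.0f}x")
print(f"AI at a 3-month doubling over {window} years: {ai:.0f}x")
```

Over the same two years, one doubling versus eight doublings is the difference between 2x and 256x. That gap is the pace I’m talking about.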

Quick walkthrough of image GenAI:

- In 2015 we had DeepDream. Those noisy, artifact-y, LSD-infused images with dog faces scattered throughout a photo. That was a spark.

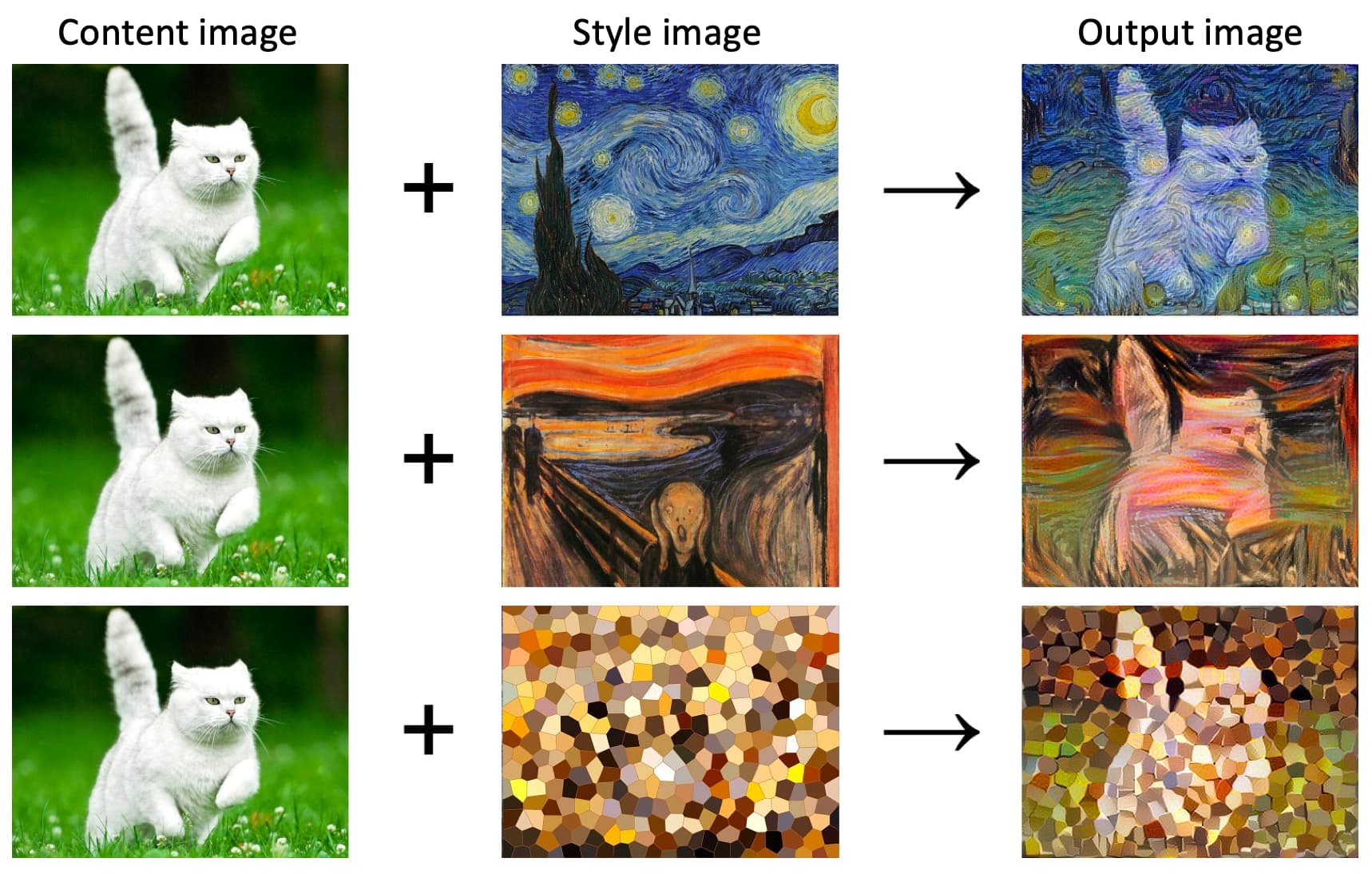

- 2016, 2017 style transfers. Things are getting very interesting.

- 2018 BigGAN. It generated semi-realistic images, with the occasional realistic one.

- In 2019, remember thispersondoesnotexist.com? That was mind blowing. It was hard to believe that those people didn’t exist, and for the majority of the images, you had to take their word for it.

- In 2021 we had DALL-E. These were novel images generated out of user instruction. The early users marveled at this and tested the capability of truly novel situation generation. These images looked pretty bad, and were low-res. You could tell these were attempted but fake images. Regardless, it made up never before existing things. Mind blown.

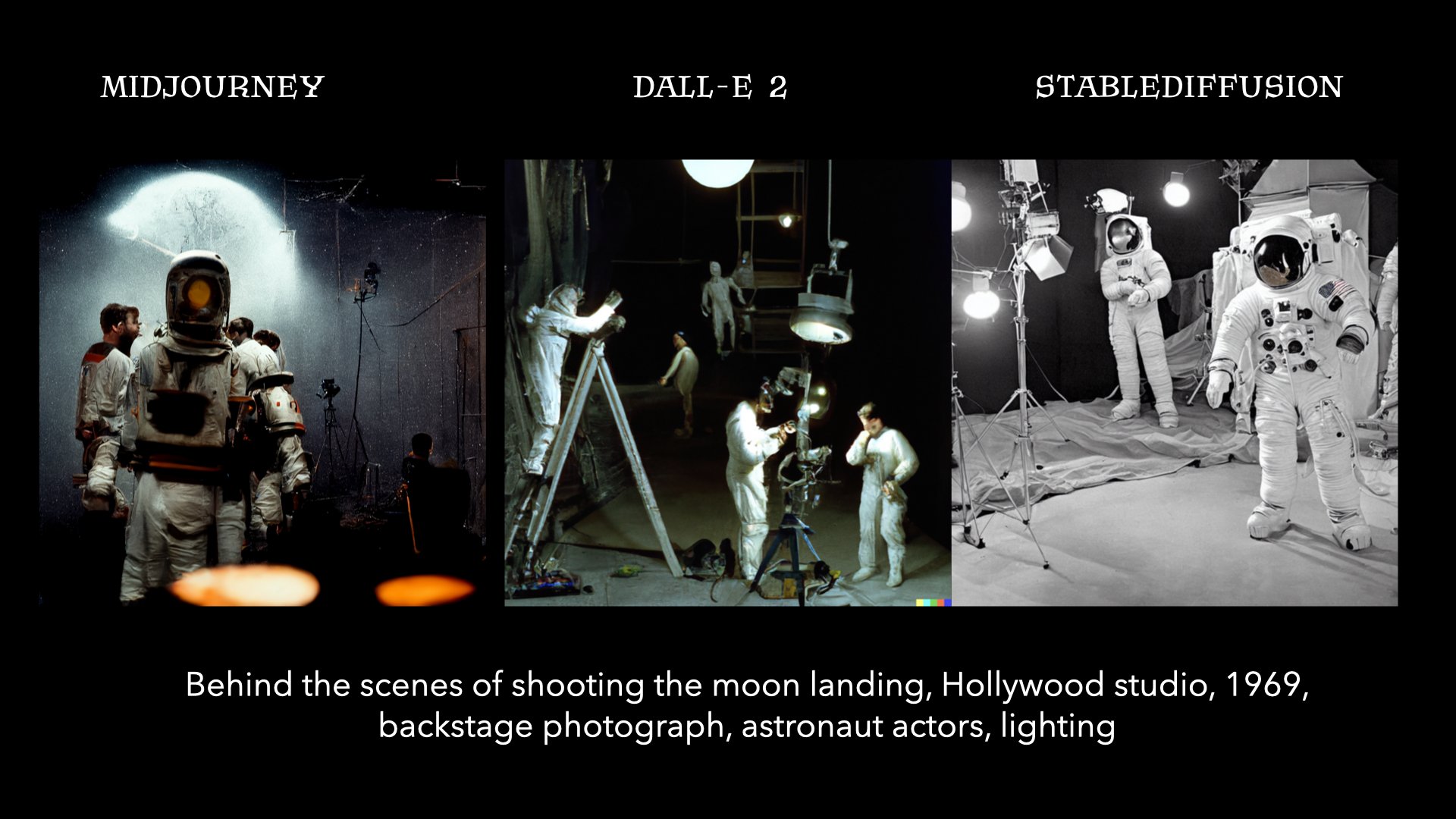

- 2022, not to ignore all the intermediary steps, but DALL-E 2 was launched. This gave us very close to life-like images. Outpainting. Inpainting. I was floored. I couldn’t imagine we would be here already. What happened?

- That same year we had Stable Diffusion, then Stable Diffusion 2. Then newer versions of Midjourney. These were incredibly realistic images, injected with any situation you wanted, from models that you could train yourself on consumer hardware. Imagen Video, from October 2022, went unnoticed for some reason. I kept visiting the site and saw the same videos improve as the months went on. Things started to get scary.

In the summer of 2022, CEOs in tech companies started having conversations about the necessity to start figuring out the use-case of implementing AI. Personally, I messaged a group of friends and decided fuck it, I don’t care how I come across. I suggested that we should all quit and dive into this now.

The image generation was just a representation of the path that all content generation was going to take (and ~~ML~~ AI in general). We would see more money being poured in, only throwing accelerants onto the plasma. We bridged this realism gap in a year and almost mastered it. 2023, I won’t even get into. Check out the Midjourney Discord generations or get your mind ready for the internet gutters and check out civitai.com. What can’t we create with this? Now extrapolate to every other task that we see ML models challenging.

Let's talk about LLMs. The idea of general intelligence was sparked again with ChatGPT. I don’t care if your opinion was that there were not sparks with 3.5-turbo, but I feel like I saw it. It could solve problems, when we translated a logical or physical problem into a word problem. These emergent capabilities that came with scale were scary. We’ve embarked on the path and the potential has been witnessed by the public.

However, my fear isn’t Skynet and AGI happening soon. My fear is that we will create a society where we’re further sedating our brains.

Infinite dopamine machine

Image, video, stories, audio, being generated for any and every possible situation. The sin stock industries will be unlocked, and catering to our addictions. Our brains haven’t evolved past the desire to consume sugar and carbs, leading to the weight epidemic in the US (fuelled by corporate greed). Now we have an infinite and unlimited supply of content to imprison our minds.

How will this happen? I can’t imagine what percentage of the population is already trapped by social media algorithms into scrolling short video clips back-to-back. I don’t know how often people consume porn or pay for sex. But we’re in a timeline where companies will be able to generate the things that will capture more of your attention and hold it for longer periods of time. Anything that you use your phone or laptop for that you think you might be addicted to, this will only add more to it. More dopamine to your brain, worse addictions, more time sucked, less attention available. That’s my fear. We’ll become the population in WALL-E. Check your phone, and see what apps you use throughout the week and how often you use them. Now imagine all you saw in those apps were the things that you wanted to see.

It’s already a part of gaming. Check out the Skyrim AI companion videos on YouTube. Check out the Matrix AI NPCs by Replica. When I first saw The Sims in grade 7, I thought it was a brilliant idea. Life in a game. Fast forward, everything now is an open world because that’s what people want. They role play entire worlds.

Whatever your digital addiction is, it will only get worse. Our brains still want sugar. Our population doesn’t have the discipline to overcome easy hits at our fingertips. My honest guess is that this will happen, and everyone will be okay with it. It’s just a very shitty situation that sucks the potential out of everyone. Idiocracy.

Exploration of wetware

Organoid Intelligence. Growing real brains in a jar and training the neurons to direct their output. This experimentation is interesting. The way they cluster and grow masses, compartmentalize, and want to think. They want to process. If our goal is to create AGI (spoiler: it is), would the best medium not be the thing that exists in nature? What is the efficiency of our brain vs a GPU? We just need to temperature control it, protect it, and give it… food. These scientists are already drawing the parallels for us. I don’t know when it’ll get more mainstream attention, but it’s a clear bridge. I don’t anticipate we’ll succeed with this in 2 years, but I anticipate that we’ll see more money and more experiments.

Imagine creating thinking minds, but putting them to work on tasks. Imagine never being able to hear or see or touch or taste. Our perception of the world is translated from electrical signals as inputs. Maybe they’ll “see” in their own ways. How easy would it be to scale something that just requires calories to burn? If 1 human brain mass can replicate a human (and realistically surpass one, since it wouldn’t need entire sections of the brain dedicated to things that are pointless for it, like hand-eye coordination or seeing), wouldn’t this vehicle be the most efficient route forward?

I admit, this is very sci-fi and draws moral concerns. It’s interesting.

Job replacement

I think this topic doesn’t need much more elaboration. I think we can move past the idea that jobs will be redundant. They will be “replaced”, but people contest the scale and the speed of this. The common sentiment is that “we survived industrialization” and so humans always find a way, look at history. We will adapt, I don’t disagree, but over what period of time did industrialization happen? With the sparks that exist and current capabilities of LLMs, extrapolated into 2 more years of progress (think about the image generation timeline), what role is safe? What information work is dependent on the capability of a human, who might be paid 10x more to perform a set of tasks? If we continue to achieve exponential improvements month after month, where will LLMs be?

The counterpoints offered explore the ideas around the limitations of LLMs. LLMs may not be the right approach. Scaling is energy intensive. There are technical bottlenecks to achieving the exponential scale. But let’s ask some questions to assess the landscape:

- How much money was raised over the past year to support LLMs?

- How many companies have taken this initiative on as a newer project?

- How many companies have readjusted their internal budgets to fuel LLM integrations?

- What massive tech corporation is not exploring this space directly at the ground level, developing LLM technologies?

I would say it’s pretty naive to think the current pace is the pace we will still be on. Efficiency, caching, anti-max, and whatever concepts are on the forefront are seeking to optimize our usage of LLMs. The hardware itself is being funded. There is an entire ecosystem that appeared out of seemingly nothing over the past year.

Play around with pi.ai and just start talking to it. Ask it for advice. Ask it to help with things. Play with Claude 2. Play with ChatGPT with GPT-4. Just pay for it. It’s the future, embrace it, learn it, know how to use it now.

Agents are coming in the next year or two. In the beginning of the year (2023), the idea was cool, but the execution was shit. If agents can call on each other to accomplish things, even coordination isn’t required by humans. I have a more difficult time thinking of the roles that a human could keep, with respect to information work.

AI rights and regulation

As we start to progress past the blurred boundaries of “intelligence”, and we get something that can project emotions and feelings, when does it actually deserve to be assigned rights? Becoming human, I, Robot, Ex Machina, Westworld. We’ve already trained for this in popular culture! It just needs some serious thought. The concept of intelligence emerging as an empirical fact of nature, as the compute for data processing scales, seems to imply that this will be inevitable. But what needs to come with the acknowledgement of rights? Independent decision making.

We’ve crossed the bridge of putting the idea of AGI out into the world, and of experts being vocal about the subject. There’s too much at stake to respect the rights of machines, because we don’t know what they could be capable of. This will get very hairy.

I don’t think people see AGI in 2 years, but we’re not waiting around for it to announce itself in order for us to start our preparation.

Intelligent robots

We currently have nimble robotic shells, and deterministic “brains” powering Boston Dynamics and all the other humanoids/robots out there. Imagine ChatGPT at the wheel of this. If we encapsulated the basic functions of a robot, provided it input as word problems, and gave it the ability to call these functions like it would call an API (like how these startups are currently doing), we would have an “intelligent” thing capable of walking around and interacting with us. “Move somewhere, jump somewhere, pick something up.” That would be cool. “Search for the thing that can explode, and remove it from the premises.” That would be phenomenal. Google’s already giving robots a brain in a similar way.
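Here’s a toy sketch of what “calling robot functions like an API” could look like. Everything in it is hypothetical: `ToyRobot`, `fake_llm_plan`, and `run` are names I invented, and the “model” is a stub that returns a canned plan instead of calling a real LLM. It isn’t how Boston Dynamics, Google, or any startup actually does it. It only shows the shape of the idea: the model emits structured function calls, and a thin dispatcher executes them.

```python
import json
from typing import Callable, Dict, List, Optional, Tuple


class ToyRobot:
    """Hypothetical stand-in for a robot's encapsulated basic functions."""

    def __init__(self) -> None:
        self.position: Tuple[int, int] = (0, 0)
        self.holding: Optional[str] = None

    def move_to(self, x: int, y: int) -> str:
        self.position = (x, y)
        return f"moved to {self.position}"

    def pick_up(self, item: str) -> str:
        self.holding = item
        return f"picked up {item}"


def fake_llm_plan(instruction: str) -> str:
    """Stub for the language model. A real system would send the
    instruction and the list of available functions to a model;
    here we return a fixed JSON plan so the example runs offline."""
    return json.dumps([
        {"name": "move_to", "args": {"x": 3, "y": 4}},
        {"name": "pick_up", "args": {"item": "box"}},
    ])


def run(instruction: str, robot: ToyRobot) -> List[str]:
    """Execute the model's plan against the functions the robot exposes."""
    tools: Dict[str, Callable[..., str]] = {
        "move_to": robot.move_to,
        "pick_up": robot.pick_up,
    }
    results: List[str] = []
    for call in json.loads(fake_llm_plan(instruction)):
        fn = tools.get(call["name"])
        if fn is None:
            # Never execute something the robot doesn't expose.
            results.append(f"unknown function: {call['name']}")
            continue
        results.append(fn(**call["args"]))
    return results


robot = ToyRobot()
for line in run("Go to the box and pick it up.", robot):
    print(line)
```

The point of the design is that the model never touches the hardware directly. It can only pick from a whitelist of functions, which is also where you’d put any safety checks.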

Now let’s take this concept, give it the body of Boston Dynamics’ Atlas or Spot, and cross the bridge of every other topic above. I’ll leave that part for you to explore.

The first ones will be impressive. The ones that come out a decade later will be a marvel.

Fusion funding

Sam Altman + Helion + Microsoft’s contract can help address energy issues. I’m not going to touch this topic here. But wow, what a world we’ll be living in. This relationship will be interesting to see.

Wrap-up

I’ll stop here. AGI is an entirely different beast, but it’s not the only thing that will happen to us as we wait for its arrival. The world will change before the Singularity and we won’t recognize it.

I’ve questioned what I would be alive to witness before this Cambrian-esque explosion of technologies. I’m grateful that I get to see this unfold, and I’m choosing to be a part of the advancement.

(I’m open for debate. Let’s converse on these topics.)